They keep changing the SteamVR VIVE input system, most recently for the Knuckles controller.

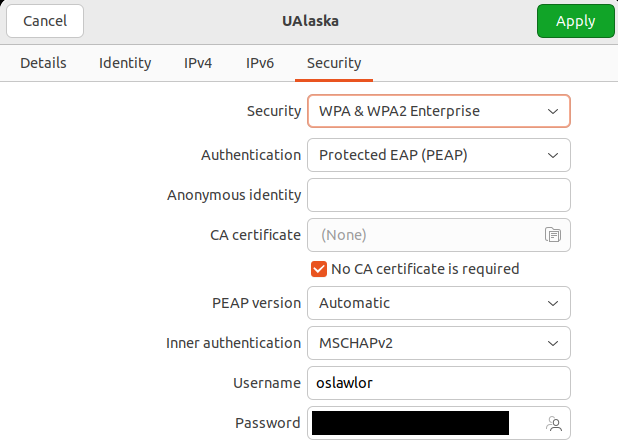

As of version 2.2 of the SteamVR Plugin for Unity, you, the game programmer, define the set of possible Actions in Unity under “Window -> SteamVR Input”. The user is theoretically supposed to be able to rebind these in SteamVR under “Devices -> Controller Input Binding”, although you ship a JSON file of (hopefully working) default bindings. You then connect those user-visible Actions to your scripts in the Unity Inspector, which is a lot of moving pieces to get right. (More “Linux gaming” than “console gaming”.)
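Before wiring anything up, it can help to check which Actions the plugin actually loaded. This is a minimal diagnostic sketch, not something from Valve's docs: the script name ListActions is hypothetical, and it assumes (from my reading of the 2.x plugin source) that loaded actions are exposed as the static array `SteamVR_Input.actions`, each with a `fullPath` property, and that the array is filled in once SteamVR input has initialized.

```csharp
using UnityEngine;
using Valve.VR;

// Hypothetical diagnostic script: logs the full path of every action the
// plugin has loaded, e.g. to confirm "Teleport" exists in the "default" set.
public class ListActions : MonoBehaviour
{
    void Start()
    {
        // Assumption: SteamVR_Input.actions is populated during plugin
        // initialization; it may still be null this early, so check first.
        if (SteamVR_Input.actions == null)
        {
            Debug.LogWarning("SteamVR input actions are not initialized yet");
            return;
        }

        foreach (SteamVR_Action action in SteamVR_Input.actions)
        {
            // Paths look like "/actions/default/in/Teleport".
            Debug.Log(action.fullPath);
        }
    }
}
```

Attach it to any object in the scene alongside the camera rig and check the Console once play mode starts.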

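For example, here’s some code that reads a boolean Action in Unity 2018.3. Create it as a new C# script (Project -> Assets -> Create -> C# Script) named DumpVR, since Unity expects the file name to match the MonoBehaviour class name: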

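```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Valve.VR;

public class DumpVR : MonoBehaviour
{
    // Appears as a dropdown in the Inspector; whatever is selected there
    // is what the script actually uses at runtime.
    public SteamVR_Action_Boolean Teleport = SteamVR_Input.GetAction<SteamVR_Action_Boolean>("default", "Teleport");

    void Start()
    {
        Debug.Log("Started DumpVR");
    }

    void Update()
    {
        // True on every frame the action is held, on any input source.
        if (Teleport.GetState(SteamVR_Input_Sources.Any))
        {
            Debug.Log("Teleport hit");
        }
    }
}
```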
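Because GetState reports the current state, the script above logs “Teleport hit” on every frame the button is held down. If you want one message per press, the boolean action also has edge-triggered queries. A minimal sketch, assuming the `GetStateDown`/`GetStateUp` methods that `SteamVR_Action_Boolean` has in the 2.x plugin source (Assets/SteamVR/Input); the class name DumpVRDown is hypothetical, and the action is picked in the Inspector just like DumpVR’s:

```csharp
using UnityEngine;
using Valve.VR;

// Hypothetical variant of DumpVR that logs once per press and release
// instead of once per frame while the button is held.
public class DumpVRDown : MonoBehaviour
{
    // Select the Teleport action in the Inspector dropdown.
    public SteamVR_Action_Boolean Teleport;

    void Update()
    {
        // GetStateDown is true only on the frame the action becomes active.
        if (Teleport.GetStateDown(SteamVR_Input_Sources.Any))
        {
            Debug.Log("Teleport pressed");
        }

        // GetStateUp is true only on the frame the action is released.
        if (Teleport.GetStateUp(SteamVR_Input_Sources.Any))
        {
            Debug.Log("Teleport released");
        }
    }
}
```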

Import the SteamVR Plugin for Unity and drag the SteamVR -> Prefabs -> [CameraRig] prefab into your scene to replace the default camera. VR head tracking should work already.

Add a Sphere (Scene -> right click -> 3D Object -> Sphere) and drag your new DumpVR script on top of your new sphere.

The first time you run this, you might get the following weird error when you try to GetState on your Action:

```
NullReferenceException: Object reference not set to an instance of an object
Valve.VR.SteamVR_Action_Boolean.GetState (Valve.VR.SteamVR_Input_Sources inputSource) (at Assets/SteamVR/Input/SteamVR_Action_Boolean.cs:94)
DumpVR.Update () (at Assets/VRtests/DumpVR.cs:17)
```

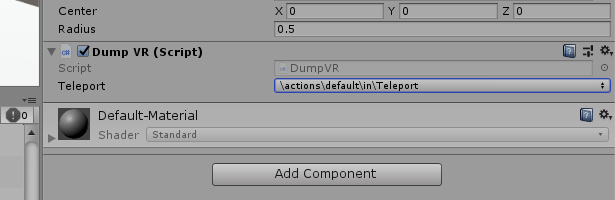

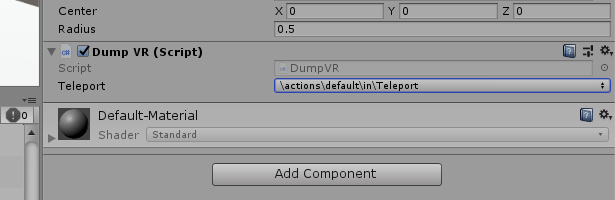

To make it work, you just need to select an already-defined action for this instance of the script. In the Unity Inspector, select your Sphere and find the Teleport dropdown on its DumpVR component.

For me that dropdown was initially set to “None”, which caused the NullReferenceException at runtime. (Sometimes it autofills correctly.)

That’s a button. For tracked positions, I was able to read the position and rotation (the “pose”) of the left hand and copy it into the containing object’s transform with:

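```csharp
using System.Collections;
using System.Collections.Generic;
using UnityEngine;
using Valve.VR;

public class HandyPoser : MonoBehaviour
{
    // Pose action to read; as with DumpVR, check the Inspector dropdown.
    public SteamVR_Action_Pose Poser = SteamVR_Input.GetAction<SteamVR_Action_Pose>("default", "Pose");

    // Which hand to follow (editable in the Inspector).
    public SteamVR_Input_Sources source = SteamVR_Input_Sources.LeftHand;

    void Update()
    {
        // Pose of the tracked hand, relative to the SteamVR tracking origin.
        Vector3 pos = Poser.GetLocalPosition(source);
        Quaternion rot = Poser.GetLocalRotation(source);
        Debug.Log(source + ": pos " + pos + " rot " + rot);

        // Move this object (relative to its parent) to match the hand.
        transform.localPosition = pos;
        transform.localRotation = rot;
    }
}
```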
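HandyPoser writes the pose into localPosition/localRotation, so the object lines up with the real hand only when it is a child of the [CameraRig] (or the rig stays at the world origin), since these poses are relative to SteamVR’s tracking origin rather than to world space. A sketch of a variant that converts through an explicit origin transform instead, using only standard Unity Transform calls; the class name HandyPoserWorld and the `origin` field are hypothetical. As far as I can tell, the plugin’s own SteamVR_Behaviour_Pose component (used on the [CameraRig] controller objects) offers a similar origin setting.

```csharp
using UnityEngine;
using Valve.VR;

// Hypothetical variant of HandyPoser that places the object in world space,
// so it does not need to be parented under the [CameraRig].
public class HandyPoserWorld : MonoBehaviour
{
    public SteamVR_Action_Pose Poser;  // pick the Pose action in the Inspector
    public SteamVR_Input_Sources source = SteamVR_Input_Sources.LeftHand;
    public Transform origin;           // assumption: drag the [CameraRig] here

    void Update()
    {
        Vector3 pos = Poser.GetLocalPosition(source);
        Quaternion rot = Poser.GetLocalRotation(source);

        if (origin != null)
        {
            // Tracking space -> world space through the rig's transform
            // (TransformPoint also applies the rig's scale).
            transform.position = origin.TransformPoint(pos);
            transform.rotation = origin.rotation * rot;
        }
        else
        {
            // Fall back to HandyPoser's behavior: relative to the parent.
            transform.localPosition = pos;
            transform.localRotation = rot;
        }
    }
}
```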

There’s a bit more description on Valve’s samples site, and hairier examples in Assets/SteamVR/InteractionSystem/Samples. The source code for the user-visible classes is in Assets/SteamVR/Input.